The Lost Tutorial of Kafka 🎏

Intro

What I mean by the title is that I had a blog post that I started a year ago (July 14, 2022, was my first draft). First tip of the day: follow through, kids. The reason I had this gathering dust was probably because I was playing Zelda or something. Recently I stumbled upon these ancient texts, which explored the wonderful world of Kafka. Upon reading them, I was deeply disappointed with the post. I was like: "Oh boy. Now that I look at it, this is kinda UGLY", with the awkward face of someone disgusted by what they had written. But I'm still going to share it with the world. I'm opening a free and welcoming place for people to criticize my work. If you've been waiting to blow up and tell someone that they suck, this is for you, pal (or maybe that's just me being my own worst critic).

But jokes aside, I think this is a cool opportunity to show how I handled a project a year ago and the approach I would take now. So, I'll just dump all the old stuff that I think is good enough to at least learn something from, and then I'll come back with something a bit better, hopefully. For context, it's just me building some microservices using Kafka for an assignment I had a while back. BUT, I think it is better if you read the assignment and implement it yourself. Then, if you want, come back and compare your implementation with mine. This way we can all learn more. So, I'll specify the assignment below for you to go over, followed by my implementation if you want to follow along with me. Now then, please enjoy: Luis Miranda and Raiders of the Lost Blog.

PS: this is for all the homies that gave me feedback when writing this blog. I just want to say that although I ignored your feedback, I'll keep it in mind for the sequel 😙. But seriously, thank you!

📂 The Assignment

About two years ago, while I was working for a client, there was an internal assignment related to Kafka. At the time, some colleagues and I worked on the assignment. Looking back at it now, I didn’t quite understand how to do the task. Learning Kafka was already challenging on its own. But after taking a look at it recently, I decided to take another shot at it.

Before laying out the assignment, I will assume that you know Spring, Kafka (basic knowledge), Docker and Databases. If not, don’t worry! I was in the same boat as you, and I enjoyed learning these different technologies. I’ll be laying out some resources for you to review and learn.

Problem Statement

🧑‍🏫 Design and implement a driver suggestion microservice using Spring Boot, Kafka and MySQL as the tech stack. The service should be able to store a store’s GPS location along with its store ID. The service should be able to capture the driver's lat/long. Then, for a given store, the service should be able to return a list of drivers sorted by their distance from that store, i.e., the driver closest to the store should be the first entry in the list.

That’s the general idea of the problem statement. Now let’s look at some key features that the application should have:

- Service should be able to take driver’s current (latest) location via Kafka event.

      {
        "driverID": "m123@gmail.com",
        "latitude": 27.876,
        "longitude": -128.33
      }

- Service should be able to take store configuration via REST API.

      {
        "storeID": "1234",
        "latitude": 27.876,
        "longitude": -128.33
      }

- The service should expose a GET API to fetch N drivers around a store. Store ID and N should be taken as a query parameter to the API.

- To calculate distance between store and driver’s latest location, please use straight line distance (crow-fly distance). For simplicity, you can use coordinate geometry distance calculation formula.

- The service should expose a POST API to take Kafka event payload and publish in Kafka.

- The service should be able to consume Kafka message from the topic even if the message is published through Kafka command line.
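To make the distance rule and the "fetch N drivers" requirement a bit more concrete, here is a minimal sketch in plain Java (no Spring or Kafka involved). The names `NearestDrivers`, `DriverLocation`, `Store`, `distance` and `nearest` are my own placeholders, not something the assignment prescribes, and I'm assuming that "coordinate geometry distance" means treating latitude and longitude as x/y points on a flat plane.

```java
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical names throughout: the assignment leaves the class design up to you.
class NearestDrivers {

    // Mirrors the driver event payload (driverID, latitude, longitude).
    record DriverLocation(String driverId, double latitude, double longitude) {}

    // Mirrors the store configuration payload (storeID, latitude, longitude).
    record Store(String storeId, double latitude, double longitude) {}

    // Straight-line distance, treating lat/long as x/y on a flat plane (an assumption).
    // The result is in degrees: good enough for ranking, not a real-world distance.
    static double distance(Store store, DriverLocation driver) {
        return Math.hypot(driver.latitude() - store.latitude(),
                          driver.longitude() - store.longitude());
    }

    // Returns at most n drivers, with the one closest to the store first.
    static List<DriverLocation> nearest(Store store, List<DriverLocation> drivers, int n) {
        return drivers.stream()
                .sorted(Comparator.comparingDouble((DriverLocation d) -> distance(store, d)))
                .limit(Math.max(0, n))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        Store store = new Store("1234", 27.876, -128.33);
        List<DriverLocation> drivers = List.of(
                new DriverLocation("far@example.com", 30.0, -120.0),
                new DriverLocation("m123@gmail.com", 27.876, -128.33),
                new DriverLocation("near@example.com", 27.9, -128.3));
        // Print the IDs of the two drivers closest to the store.
        nearest(store, drivers, 2).forEach(d -> System.out.println(d.driverId()));
    }
}
```

With the sample data in `main`, this prints `m123@gmail.com` followed by `near@example.com`. Sorting the whole list is the simplest thing that works; with a lot of drivers you would probably want something smarter, but that is a problem for later.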

System Guidelines

The assignment also specified some guidelines to follow:

- You are free to choose the structure of the microservice and its constituent modules which will interact with DB, Kafka, API etc.

- There is a docker compose file provided along with this document. This file already has required containers for Kafka, Zookeeper and MySQL.

  - Zookeeper will be running at: localhost:2181

  - Kafka will be running at: localhost:9092

  - MySQL will be running at: localhost:3306

    - Login username = user

    - Login password = user123

- Spring boot service should be running on port number 9080.

- Use Kafka topic name “driver_location” to capture driver’s current location.

- You decide appropriate class names, modules, Rest API endpoints, DB schema.

Here is the docker compose file:

Project Components

With all the above information, we have enough to start building our project. Before we go ahead and start working on the assignment, I’m going to lay out the broad idea and all the components that we will be working on.

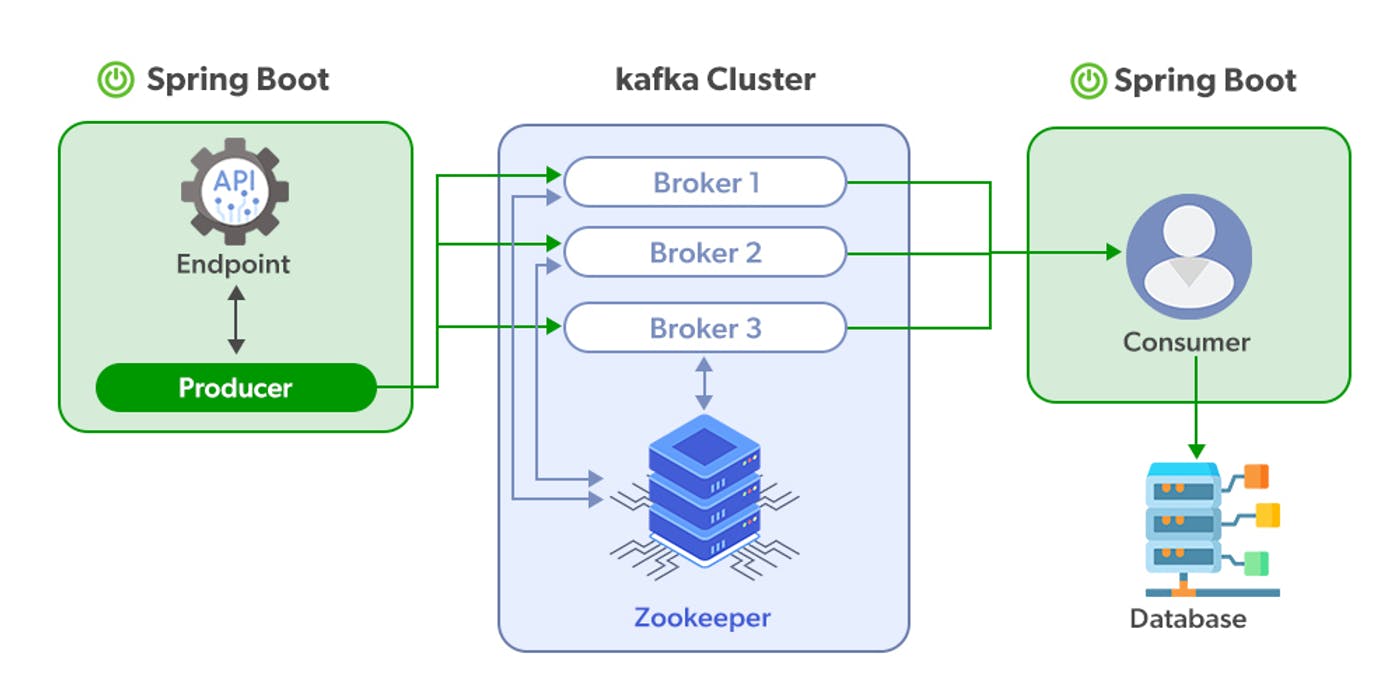

Kafka

Kafka will be playing a major role in this project, so it’s essential that we get a good understanding of it. Firstly, we will be creating a Producer, which will send Events (aka messages/records) to a topic on a Kafka cluster. The topic will hold the driver information as an append-only log. Next, we will create a Consumer, which will subscribe to that topic and pick up events in real time as they come in. With this in mind, we will be creating two services: a Producer and a Consumer.
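If the log idea feels abstract, here is a toy, in-memory model of it in plain Java. To be clear, this is not the Kafka client API and none of these names (`ToyDriverLog`, `DriverEvent`, `LatestLocationConsumer`) come from Kafka or from the assignment; it is only a sketch of the concept, assuming a single log and a single consumer that tracks its own read position and keeps the latest location per driver.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// A toy stand-in for a topic. This is NOT how you talk to a real Kafka broker.
class ToyDriverLog {

    // Hypothetical event type mirroring the driver payload.
    record DriverEvent(String driverId, double latitude, double longitude) {}

    // Events are only ever appended, never updated in place.
    private final List<DriverEvent> log = new ArrayList<>();

    // "Producer" side: append an event and return its offset (its position in the log).
    int send(DriverEvent event) {
        log.add(event);
        return log.size() - 1;
    }

    // "Consumer" side: remembers how far it has read and keeps the latest location per driver.
    static class LatestLocationConsumer {
        private int nextOffset = 0;
        private final Map<String, DriverEvent> latestByDriver = new HashMap<>();

        // Reads every event appended since the previous poll and returns how many were read.
        int poll(ToyDriverLog topic) {
            int read = 0;
            while (nextOffset < topic.log.size()) {
                DriverEvent event = topic.log.get(nextOffset++);
                latestByDriver.put(event.driverId(), event); // a newer event replaces the older one
                read++;
            }
            return read;
        }

        // Returns the most recent event seen for this driver, or null if there is none.
        DriverEvent latest(String driverId) {
            return latestByDriver.get(driverId);
        }
    }

    public static void main(String[] args) {
        ToyDriverLog topic = new ToyDriverLog();
        LatestLocationConsumer consumer = new LatestLocationConsumer();
        topic.send(new DriverEvent("m123@gmail.com", 27.876, -128.33));
        topic.send(new DriverEvent("m123@gmail.com", 27.900, -128.30));
        int read = consumer.poll(topic);
        System.out.println(read + " events read, latest: " + consumer.latest("m123@gmail.com"));
    }
}
```

The point to notice is that the log keeps both events for the same driver, while the consumer decides what "latest" means on its side. That separation is the part that carries over to the real thing.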

Spring Boot

The Producer and Consumer will each be a Spring Boot project built with Maven. While working on this assignment, I wanted to learn a new language: Kotlin. I’ve been writing Java since college, so I was getting a little bored with it, and I saw this as an opportunity to learn something new. Everything related to Kafka will be in Kotlin. The rest of the project will be in Java.

MySQL

This will be our main database. We will mainly use it for store information, with Spring Data JPA as the persistence technology.

Evaluation

Even though I am not submitting this project, the assignment had some pointers on how this would be evaluated. I think it is a good idea to keep this in mind to create the best possible product we can.

- How efficiently you solve the given problem in terms of time/space complexity.

- Latency of REST APIs.

- Coding standards, JUnit coverage, REST compliance, structure of multi-module Maven project, database design (for scale).

- Ability to start the service in working condition just by running “docker-compose up” without any errors.

Conclusion

This project will be divided into parts, since there will be a lot of information and code to cover. Also, I will be gradually updating my GitHub, so you can see the full project there, and I will be open to suggestions if you want to work along as well.